Are you ready to partner with a custom eLearning solution provider? Or are you still exploring your eLearning options in the research phase? Either way, you know that deciding whether to move forward with creating a custom eLearning solution is a big decision.

In our article What Does a SweetRush Custom eLearning Solution Cost?, we shared the factors that affect the cost of custom eLearning solutions and revealed what you can expect to pay for one hour of SweetRush custom eLearning.

In this article, we explain what you can expect when partnering with SweetRush and what’s included in the cost of a SweetRush custom eLearning solution. We introduce you to your dedicated SweetRush team by sharing their roles and responsibilities. We also walk through the project deliverables you can expect to receive. Finally, we share how we manage the cost of your custom eLearning solution throughout its development.

Want to refer back to this later? Download the full article to read at your convenience or share with your team!

What Can You Expect from the SweetRush Team?

Curious about the roles and responsibilities of your dedicated SweetRush project team?

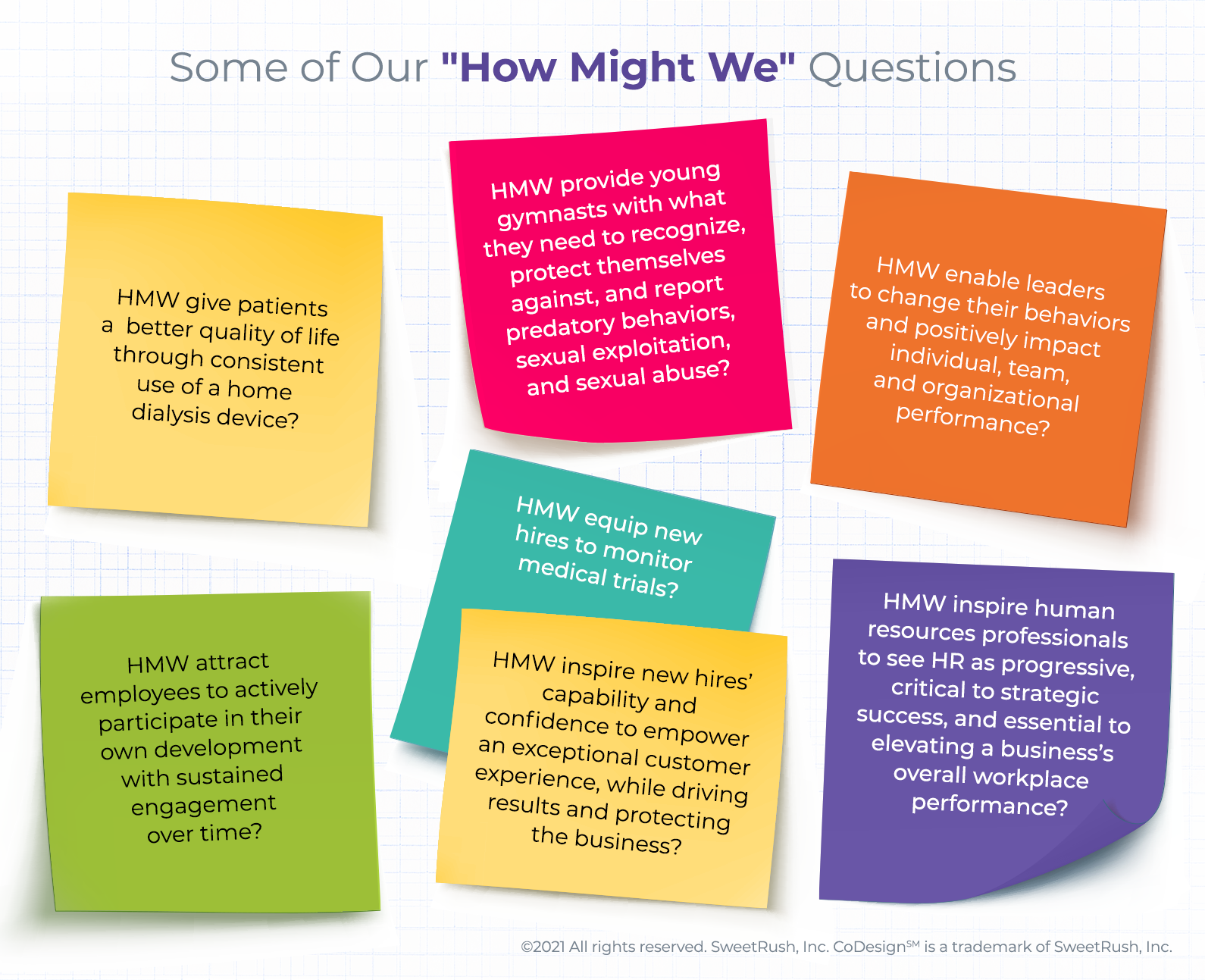

When you decide to embark on a custom eLearning project with SweetRush as your eLearning partner, you can expect to connect with an expert team of experienced and passionate professionals. From initial consultation to design to production to the final details, we partner and collaborate with you every step of the way.

Each project is assigned a hand-picked team, who you’ll meet in just a moment. Different roles and additional team members may be added depending on the scale and complexity of your project, or the technologies involved, but you can expect to have at least one of the team members listed here. Regardless of what your final team looks like, you can rest assured that they’ll be working with you (face-to-face or behind the scenes) to bring your eLearning dreams to life!

Consultation Phase

During your initial pre-sale consultation, you’ll meet the following folks.

Solution Architect

We don’t call them architects for nothing! These talented individuals will listen to your needs, understand and answer your questions, and dig in to fully grasp the scope of your project. They’ll make sure we have the right tools and people in place to provide a solution to match your eLearning needs. They will be your initial point of contact. In fact, if you decide to send us a request for proposal (RFP) during your custom learning partner search, the Solution Architects are the folks who’ll answer your questions.

Design and Development Phase

If, after the initial consultation, you decide to move forward with us, we’ll assign a team who’ll work with you on your eLearning project from beginning to end. Here’s who you’ll be working with.

Project Manager (PM)

Your SweetRush Project Manager is your liaison and go-to point of contact for the lifecycle of your project. After receiving the project information from the Solution Architect, the Project Manager leads the project team and manages all of the in-house experts for you. Your Project Manager is responsible for the entire scope of the project, from inception to launch, covering both the big picture and the day-to-day details.

Lead Learning Experience Designer (Lead LXD)

The Lead Learning Experience Designer takes over the role as chief architect for your home.

The role of the Lead Learning Experience Designer (Lead LXD) is to ensure the instructional integrity of the learning solution. They want to know: Are learners learning something? Are they immersed and engaged in the experience?

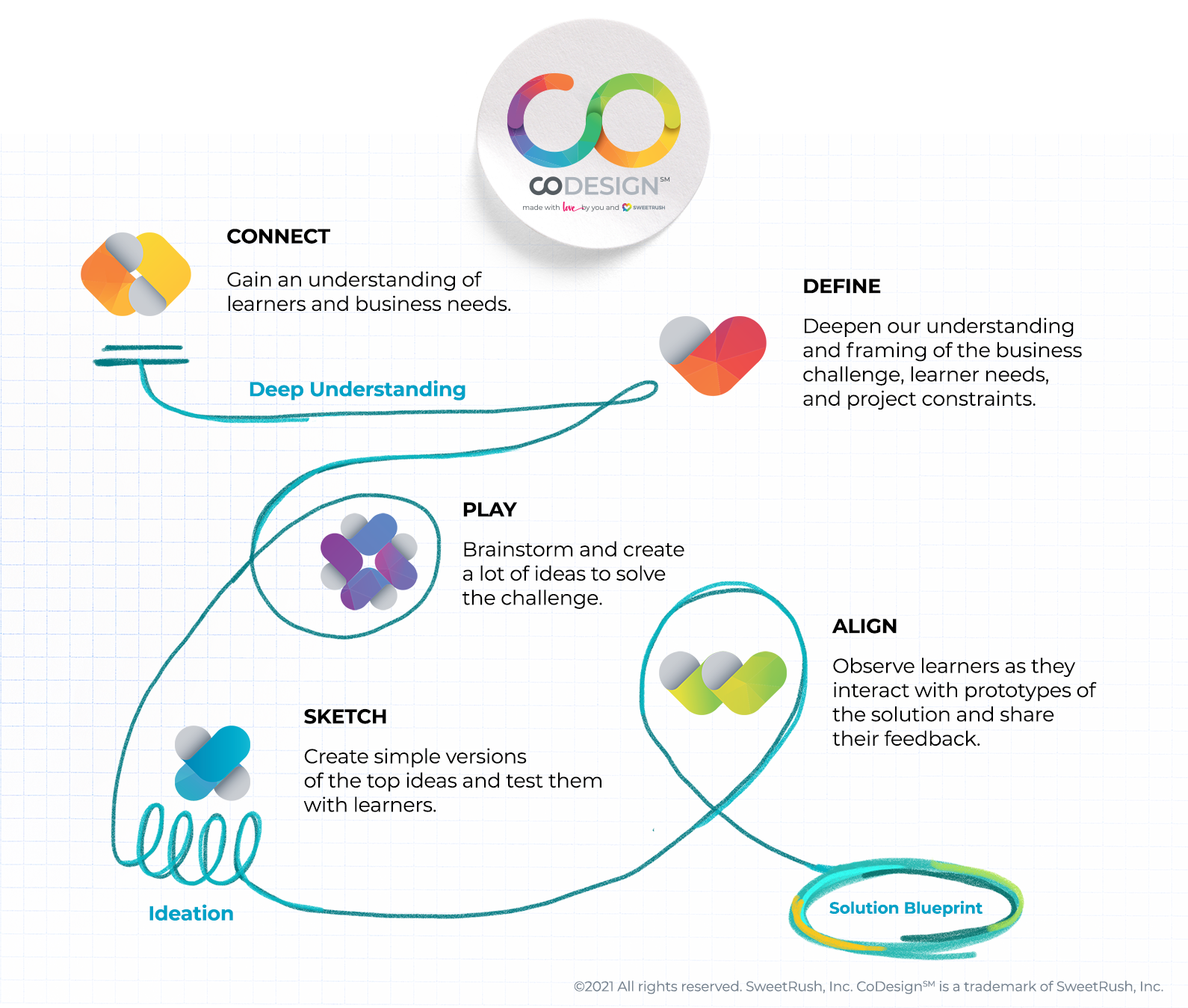

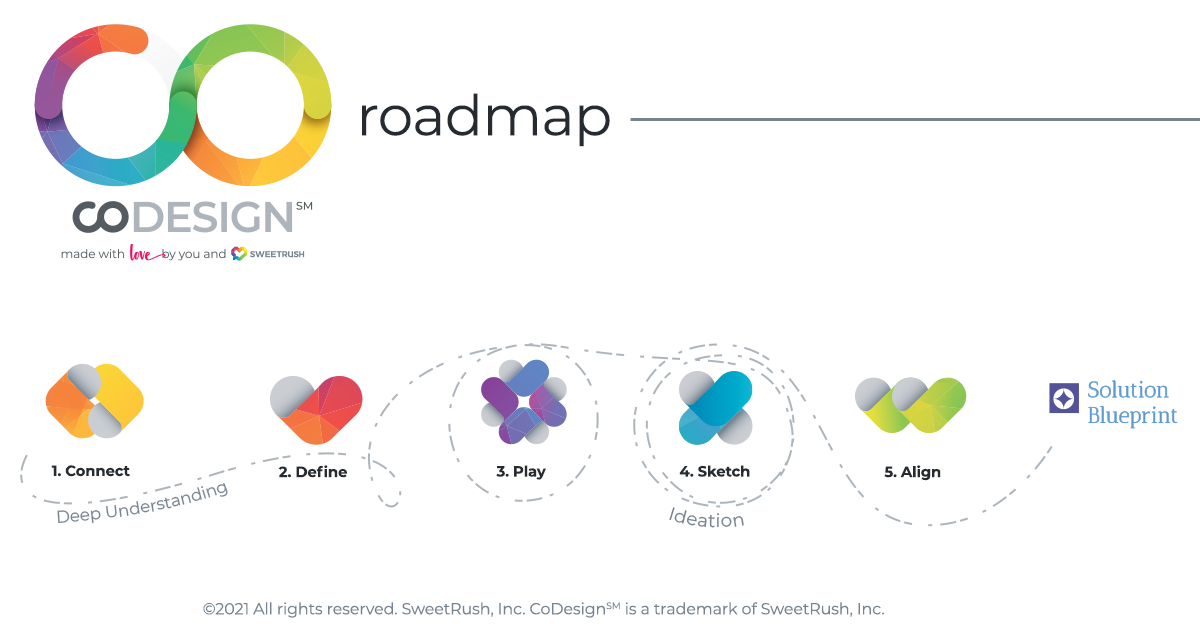

You can expect to work very closely with the Lead LXD at the beginning of the project, when they’ll partner with you to confirm the overall business need and identify the performance gaps of your target audience. Depending on the scope of your project, the Lead LXD will create a high-level design or solution blueprint that you’ll review and sign off on (more on this later). From this point on, the Lead LXD will switch to a leadership role and will guide and support the learning experience designers (LXDs), who will take over to flesh out the high-level design into a finished solution.

Learning Experience Designer (LXD)

Learning Experience Designers (LXDs) are the folks who’ll write and create your eLearning story, complete with characters and storyboarding. In addition to being incredible LXDs, many of our team double up as subject matter experts (SMEs) with specialties in different areas such as finance, healthcare, and manufacturing. We make sure to pair the right LXDs to your project based on their background and your needs. The LXDs are guided and supported every step of the way by the Lead LXD for your project.

Creative Director

Just as the Lead LXD designs the learning solution, the Creative Director (CD) does the same for the visuals of your eLearning solution.

While we are known for creating beautiful, agency-level design, we know our solutions have to be instructionally sound. Our CDs work closely together in a crucial partnership with the Learning Experience Design team to craft beautiful, engaging learning experiences that speak authentically to your learners.

CDs provide the creative vision and design for the experience, including the characters and backgrounds that will bring your learning to life. With a laser-sharp focus on the audience, they create moodboards and style guides that adhere to your company’s brand. The CD also directs and leads the multimedia experts who build the course assets—such as videos, illustrations, and animations—that keep learners actively engaged in the experience.

Finally, the CD makes sure the user experience and interface—buttons, menu, and navigation tools—all are in line with the look, feel, and overall experience they’ve created for your custom project.

Multimedia Experts

Following the direction of the Creative Director, this pool of creative folks includes graphic designers, 2D/3D illustrators and animators, artists, and videographers.

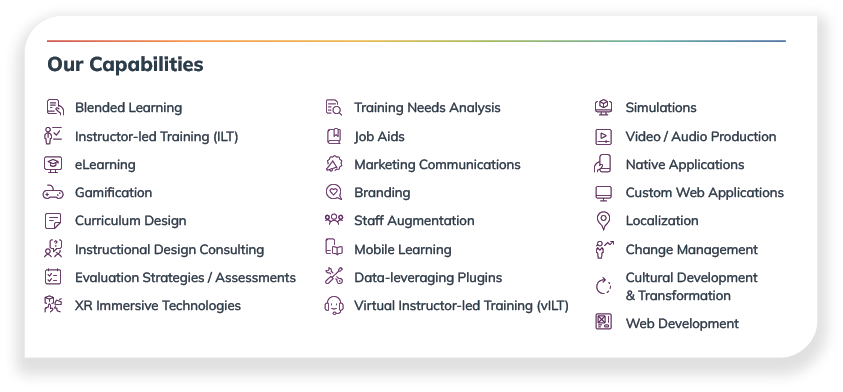

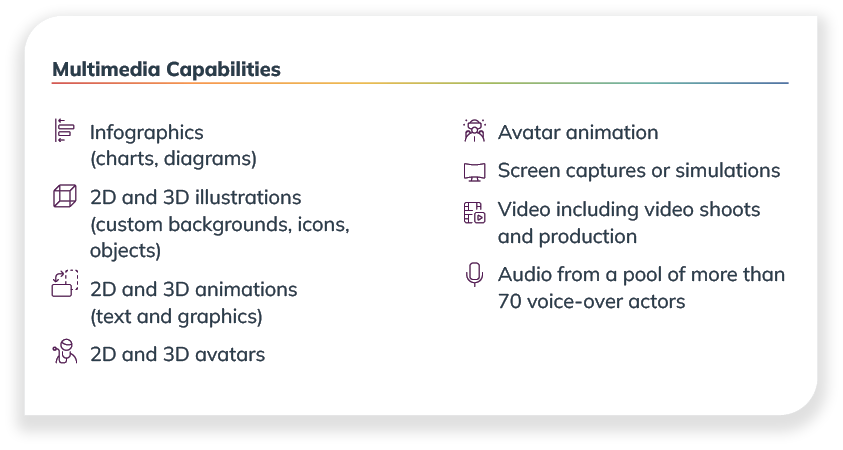

What Can Our Multimedia Experts Create?

A better question is: What can’t they create? While we are an eLearning company, we have the capability to produce agency-level design. Our award-winning creative team of graphic designers, animators, illustrators, and voice-over artists can provide the following during your eLearning journey:

- Infographics (charts, diagrams)

- 2D and 3D illustrations (custom backgrounds, icons, objects)

- 2D and 3D animations (text and graphics)

- 2D and 3D avatars

- Avatar animation

- Screen captures or simulations

- Video, including video shoots and production

- Audio from a pool of more than 70 voice-over actors

Lead eLearning Engineer/Developer

The Lead eLearning Engineer/Developer works with the other leads (Creative Director, Project Manager, Lead LXD) to examine the high-level design or blueprint and eventually the storyboard to make sure what’s been dreamed up can work in reality. With a focus on technology and specifically the user experience—think accessibility and interactivity—they provide the direction to actually build the solution with the help of eLearning Developers.

eLearning Developers

The engineers push the boundaries of learning technologies to customize the learning experience for you. With a solid grounding in the learning and creative strategy, they can make suggestions on execution and solve challenges that come up.

Audio Team

The Audio Lead collaborates with the other leads on the project (Creative Director, Lead LXD, Project Manager) to understand the vision and needs of the project. With a pool of over 70 voice-over actors covering a range of ethnicities, accents, and genders, this in-house specialty is a strong differentiator for SweetRush. The Audio Lead will provide you with several options for characters. These accomplished voice-over actors have extensive experience in both eLearning and commercial work.

Copy Editor

Copy Editors adhere to your organization’s style guide. They advocate for your brand’s tone, language, and overall grammatical structure and consistency. Before the solution is even built, they make sure the text and dialogue meet the highest professional standards. Their review is thorough and detail-oriented. With Copy Editors on the team, you don’t have to worry about typos creeping in or the tone sounding unlike your organization.

Quality Assurance (QA)

The Quality Assurance team will make sure they understand exactly how you want your eLearning to be accessed by your learners (platform, browsers, etc.). They make sure your storyboard and product match, including screens, dialogue, and buttons. They also make sure that all sounds, interactions, accessibility requirements, learner evaluations, and tests within the eLearning solution function properly. They perform tests and make sure that your solution works on the appropriate devices, browsers, and/or learning management systems (LMSs).

What Deliverables Can I Expect During My Custom eLearning Solution Project?

Once your project is signed and underway, you can usually expect at least five deliverables from us: a Detailed Design Document, a storyboard, alpha and beta versions of your eLearning solution, and the final SCORM package.

Note that on some projects, we may work more iteratively, in which case you can expect to see solution blueprints, prototypes, visual storyboards, and much more. On blended learning projects, you’ll also receive facilitator and participant guides, presentation decks, and performance support tools. Since every project is custom, the specific details will vary, but this list gives you an idea of what you can expect as a minimum.

Deliverable #1: Detailed Design Document (DDD)

The DDD, which may go by a different name depending on your specific project, provides you with a sneak preview of the learner journey, the interactions we’re planning to use, and any knowledge checks or assessments. Often presented in a table format, the DDD lists the number and types of screens we’re planning to use and where each lesson or section begins and ends. You shouldn’t expect to see any specific detail, such as graphics or script, in the DDD; however, you will have a clear understanding of the structure, sequence, and flow of the solution.

Deliverable #2: Storyboard

Once the overall learner journey is complete, the LXDs get to work on the storyboard. The storyboard documents all the on- and off-screen content and action. You’ll be most interested in the on-screen content, which includes the text and visuals that will appear as well as detailed scripts for narrators and any characters. The off-screen information has everything our developers need to build the experience, including how the interactions will work and cues for animation and sound. If the experience contains branching or adaptive learning features, the storyboard will also call out these prompts and directions. Needless to say, the storyboard is a bit of a beast and can be overwhelming at first, but don’t worry! We will provide tips for your review and will call out the things you can safely ignore.

Deliverables #3–5: Alpha, Beta, and Final SCORM Package

To get to the final product of the learning solution, we will first build the alpha version, which is a basic wireframe that first brings your vision to life so you can react to it. We need to make sure everything is there for you and that you are in agreement with the direction of the solution. You’ll likely give the most feedback during this first stage.

Once that’s approved, we move on to the next stage and build the beta, which incorporates your feedback from the alpha. This version includes polished graphics, video, and voice-over and should be very close to the final product.

Once you review the beta and give your feedback, we can then produce the final SCORM package, which your learners can then enjoy!

How Will I Know What’s Happening?

How do we keep all the moving parts, people, and pieces coordinated and organized as we create your impactful custom eLearning solution? Strong project management tools and processes keep everything on track!

During the entirety of your project with SweetRush, you will have:

Personalized project dashboards

Welcome to your one-stop shop of information! All of your project information, tips, and guides will be located here. You don’t have to hunt and peck for different files and folders because this is your one single source of truth for the duration of the project.

Regular project meetings

Let’s chat! Weekly meetings with your dedicated SweetRush team will help keep the project on track and keep you informed of what’s to come. Regular communication is key to connection and efficiency.

Delivery dates

No surprises here. We will give you as much advance notice as possible of delivery dates and of when you will need to review. You will also have a wealth of client education tools to help you understand what is needed from you, how to review, and what the next steps are. The Project Manager will work hand in hand with you (and virtually hold your hand, if needed) to make sure everything is crystal clear and set up for success! We will guide you and communicate clearly every step of the way to keep the process smooth and consistent.

How Do You Manage the Cost Once My Custom eLearning Project Is Underway?

OK, so you understand who’s involved and what the project lifecycle entails. Now, let’s get back to talking dollars and sense. Even the best-laid plans can sometimes veer off course. Here’s how we manage any deviation from the plan and scope. (Spoiler alert: It’s communication!)

- First things first, we work to be as transparent as possible from the get-go. We ask a lot of questions during the initial analysis phase to ensure that we can accurately scope your solution.

- Next, we develop a very detailed statement of work (SOW). And when we say detailed, we mean super detailed. We list every deliverable, resource, and asset and provide a breakdown that shows you exactly what you can expect to receive at the end of the project.

- The SOW is then used alongside a budget tracker to record small changes throughout the project’s lifecycle. This allows us to identify in real time where and when any small decisions begin to add up and could place us at risk of getting off course (aka change order territory).

With these best practices in place, we can work with you to identify, mitigate, and communicate these risks before they happen! And we’ll work with you to resolve any challenges that arise. We are in this together as partners!

With an all-star team of learning experts guiding and listening to you each step of the way, tools to keep everyone in sync, and healthy communication, your dream is in great hands. Together, we will build the custom eLearning solution that your organization needs to thrive.

Ready to start building? We’d love to hear from you!

More Resources to Help You Decide If a SweetRush Custom eLearning Solution Is Right for You

Still digging around for information?

If you’re not quite ready to make a decision yet and are still in research mode, we’ve also got your back with these articles.

- Want to know the price tag? Read What Does a SweetRush Custom eLearning Solution Cost? to peek behind the curtain on cost calculations.

- Looking for a lower-cost option? Read Does SweetRush Offer Any Lower-Cost Options for Custom eLearning? to find alternative solutions to fit your budget.

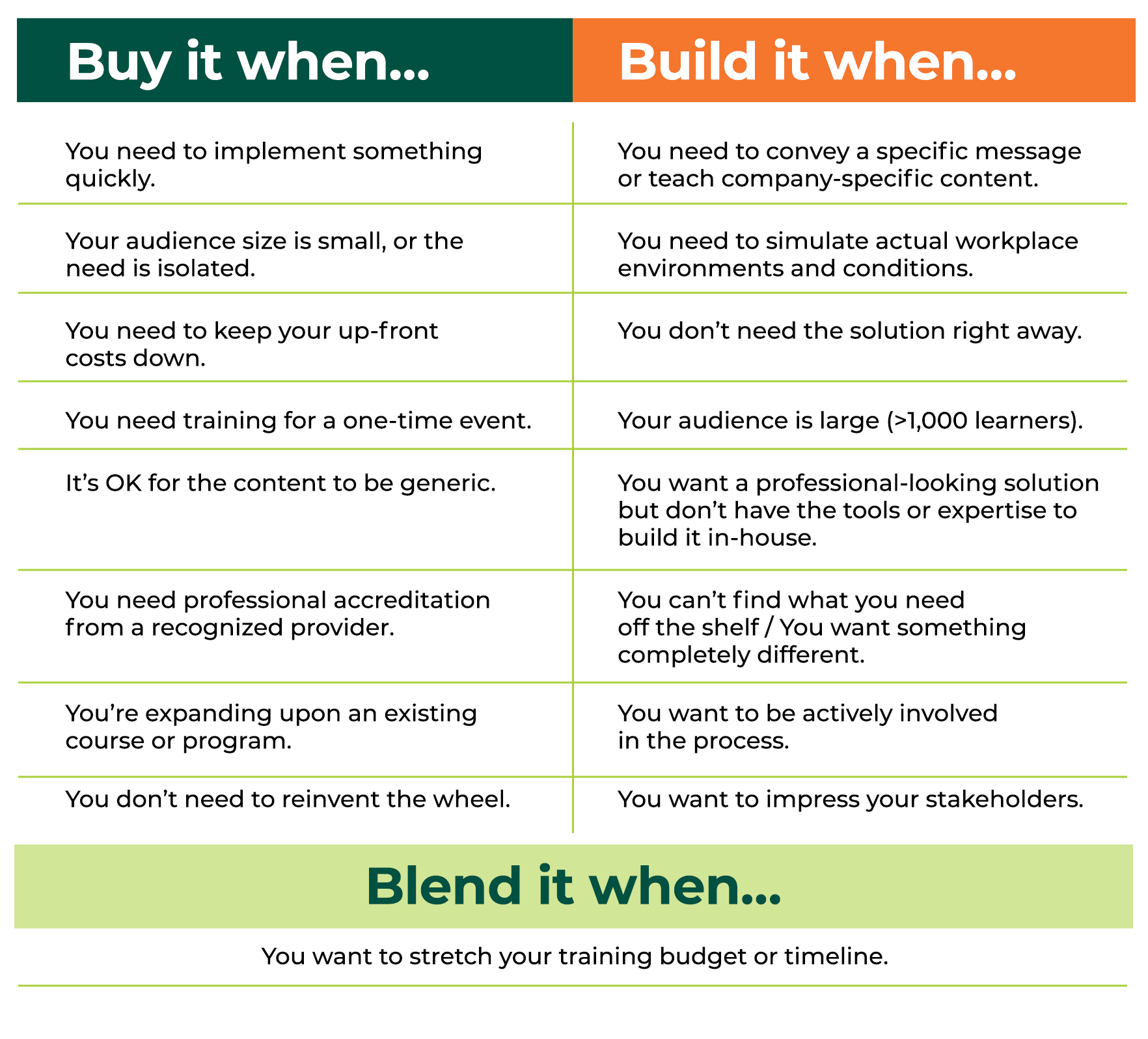

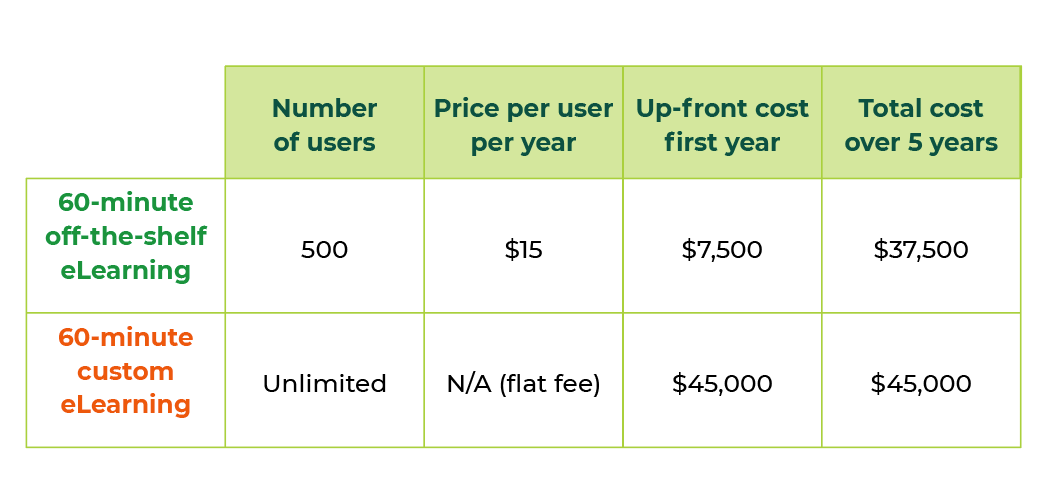

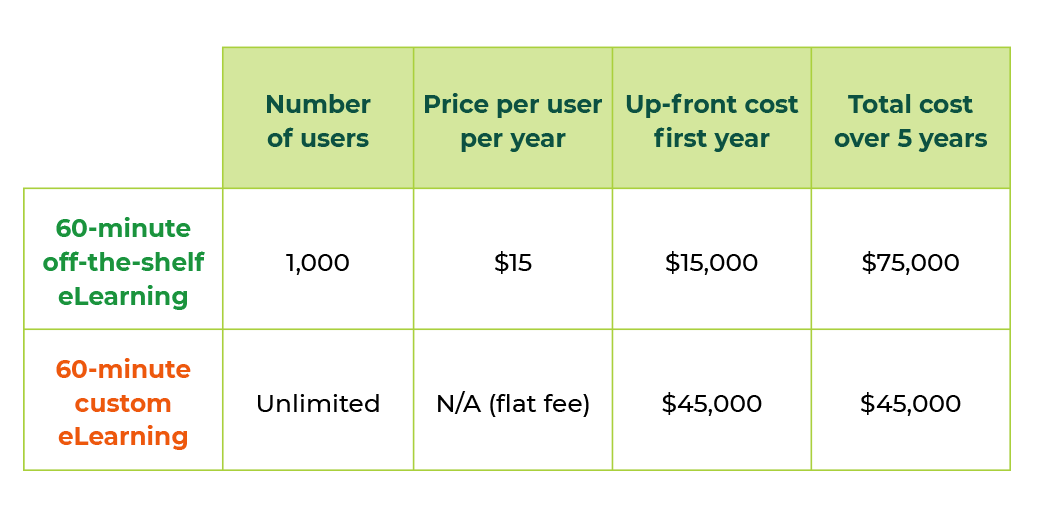

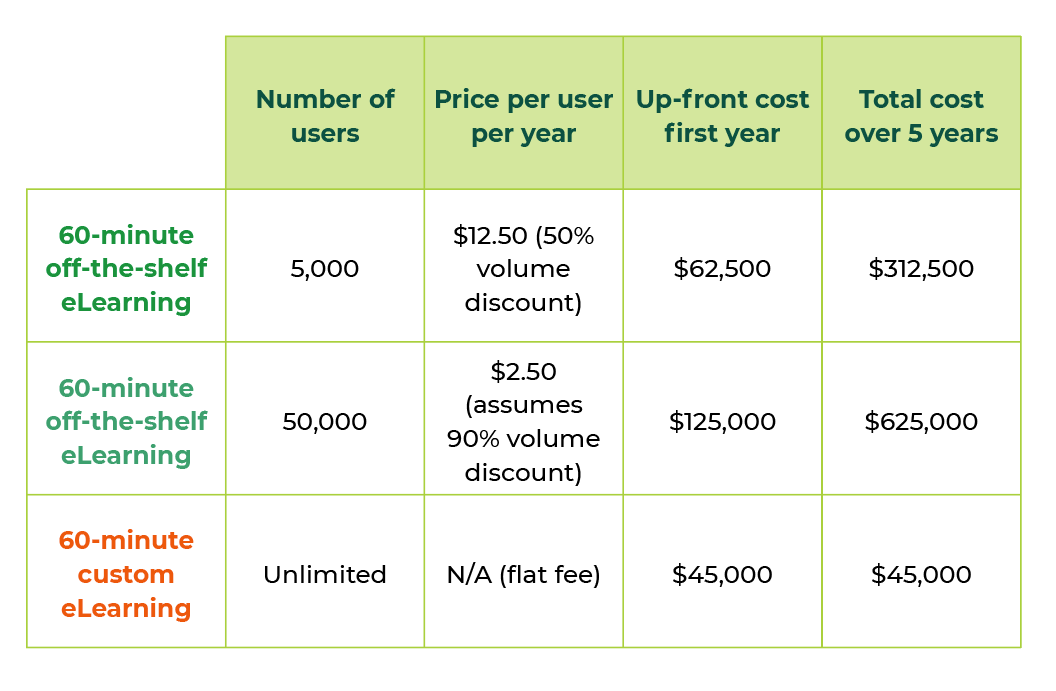

- Weighing the benefits of custom eLearning vs. off-the-shelf solutions? Read Off-the-Shelf vs. Custom eLearning Solutions: Which Is Best? for an honest look at the pros and cons of each option. Then read Buy, Build, or Blend? Use Cases for Off-the-Shelf and Custom eLearning to find out when it makes the most sense to buy off-the-shelf, build custom learning, or blend both options.

Send us your questions

Did we miss anything? What other questions would you like us to answer? What other articles can we create to help you make more informed decisions?

Get in touch and we’ll do our best to answer them for you!